Our mirror neuron course is wrapping up soon. For the final meeting (next Monday) we decided that we would all go to the literature, pick our favorite mirror neuron paper, and report back to the class on what we learned. I know some students are looking into papers on language evolution and others are looking at clinical applications (e.g., autism), so it should be interesting. If any TB readers would like to summarize a favorite paper, please feel free to post a comment!

So what did we learn in this course? One thing I learned is that it is completely unclear what real (i.e., macaque) mirror neurons are doing. There is no evidence whatsoever that mirror neurons in macaque support action understanding. In fact, Rizzolatti has stated that this hypothesis is impossible to test using standard methods (lesion). Might MNs support imitation? Well, macaques are supposed to lack the ability to imitate. If this is true -- and given recent reports the jury may still be out on this -- MNs cannot support imitation. I suggest they simply reflect good old-fashioned sensory-motor associations.

On the human front, we also learned that there is no evidence that the "mirror system" (in quotes because the response characteristics of this system are different from real mirror neurons) supports action understanding, and in the speech domain there is, in fact, good evidence against the view that the "mirror system" is the basis for speech recognition. The "mirror system" may support gesture imitation in humans, which need not involve understanding. But this is just another way of saying that the brain can establish sensory-motor associations, which is certainly not news.

So if there is NO evidence supporting the most flashy claim regarding mirror neurons (action understanding), why has this system gotten so much attention? And why has it been so widely and blindly accepted as truth? I don't know, but I can speculate:

1. The idea has intuitive appeal. Since we all understand our own actions (or at least think we do -- I bet this is debatable), it seems reasonable that we might understand other people's actions by relating them to our own. Be wary of hypotheses with intuitive appeal: they require much less empirical support to convince people that they are true.

2. The idea simplifies a complex issue. Semantics is complex. (See our previous blog discussions.) If we can explain how we "understand" via the response properties of a small population of cells in Broca's area, this avoids all kinds of messy semantic complications.

3. There is a cellular grounding. If the only data regarding the mirror system came from human functional imaging studies, I bet no one would believe it. The fact that a cell in the monkey F5 shows "mirror" properties provides a neurophysiological anchor for human-related speculation, and this goes a long way towards lending credibility.

4. There is a cognitive grounding. The motor theory of speech perception provided an independently motivated theory (even if it is wrong) to back up the general concept of a motor theory of action understanding. It's no accident that the motor theory was referred to in the earliest empirical MN papers.

5. It is easily generalizable. If we understand actions of others by relating them to our own, we might understand speech, emotions, etc., in the same way. Now we have a cellular basis for all sorts of complex systems ranging from speech to empathy, and potential explanations for complex disorders such as autism.

You put all these plusses together and you have a theory that people are willing to impulse-buy without evidence, and without thinking too hard about it.

So the next time you review a paper that states, "This [mirror neuron] research has shown that areas of the brain which subserve motor action production are also involved in action perception and comprehension." (Saygin et al., 2004, Neuropsychologia, 42:1788-1804, p. 1802), note in your review that this claim is speculative and unsupported by empirical evidence. If the authors want to include such a statement, this is fine, but it should be prefaced by "Although there is no evidence to support the theory, mirror neuron research has been used as a basis to speculate that..." Otherwise, the innocent reader will assume that there is an empirical backing to the claim and the speculation will continue to be propagated in the literature as fact. If we take this attitude, the field will be better situated to test the hypothesis rigorously, and if the evidence is supportive, the theory can win over even the skeptics like me. If it is not supported, then we can figure out what this system might be doing. Either way, we make progress.

News and views on the neural organization of language moderated by Greg Hickok and David Poeppel

Wednesday, May 28, 2008

Thursday, May 22, 2008

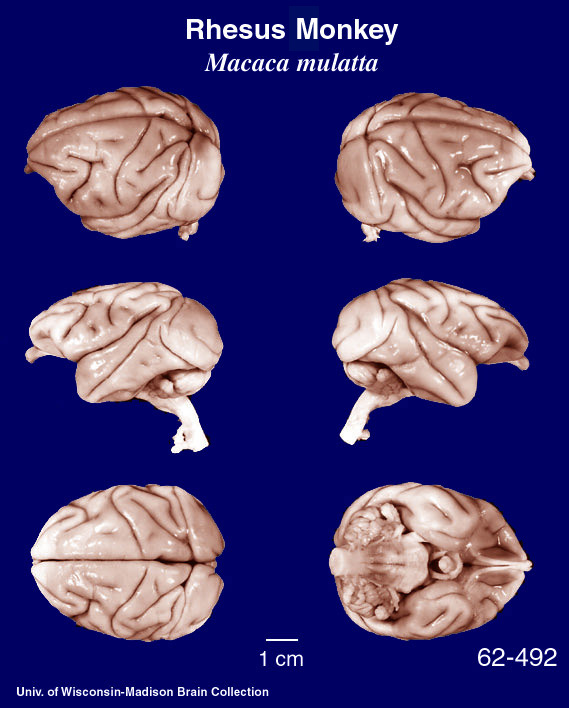

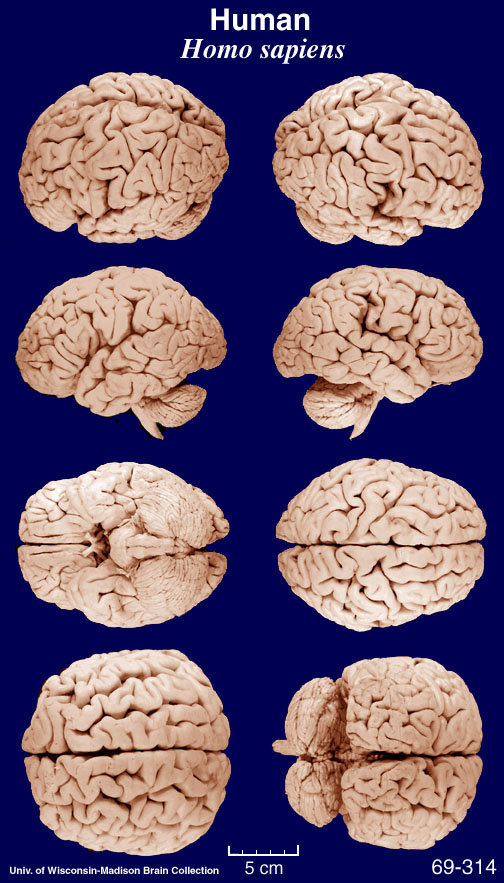

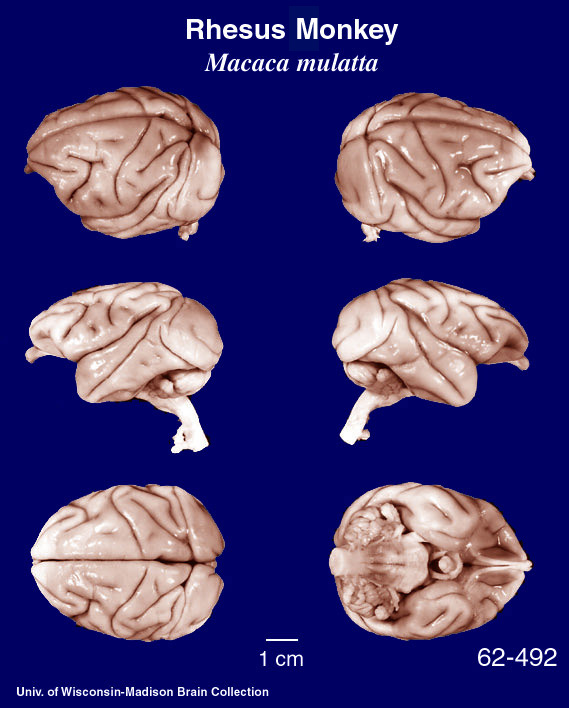

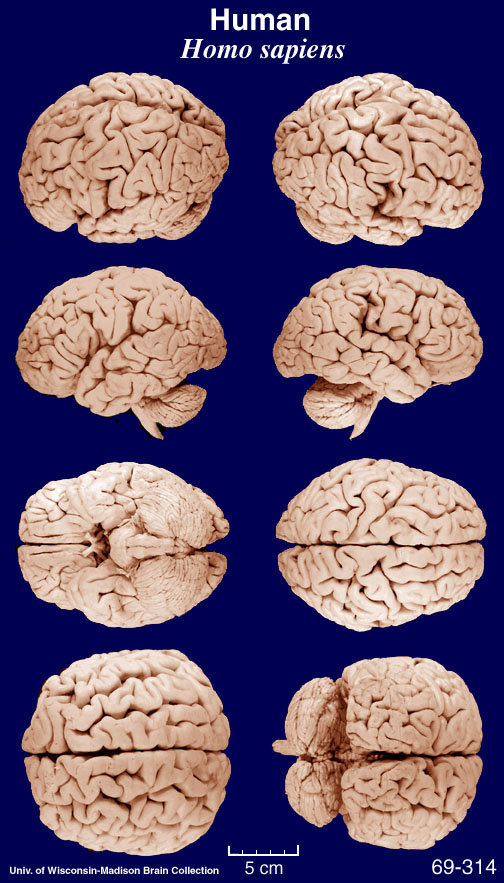

Comparative neuroscience: Macaque vs. Human brains

We've filled up a fair amount of blog space lately talking about comparative neuroscience -- using functional anatomic data from one species (macaque monkey) to make inferences about the functional organization of neural systems in another (human). I am a big fan of comparative approaches. We can learn a lot from animal models. However, it is important to remember that we are dealing with systems which are not necessarily homologous. This is particularly true when we start talking about higher cognitive functions.

Given that the macaque is a favorite model for much human neuroscience generalization, it is instructive to remind ourselves that the macaque and human brains are rather different on gross examination, as the images make clear. BTW, this is a Macaca mulatta specimen -- a species used in much primate neuroscience research, but not the species in which mirror neurons were discovered, which is Macaca nemestrina. But you get the idea.

These images came from the Comparative Mammalian Brain Collections webpage.


Wednesday, May 21, 2008

Gesture discrimination in patients with limb apraxia -- evidence for the mirror system?

As hinted at previously, the recent paper by Pazzaglia et al. (2008, J. Neuroscience, 28:3030-41) provides the best evidence I've seen in support of the mirror neuron theory of action understanding. This is a very nice paper, and a brilliant effort to assess the neural basis of gesture discrimination deficits. Unfortunately, there are some complications.

Here's what makes the paper look strong:

Subjects: 41 CVA patients were studied, 33 with left brain damage (LBD) and 8 with right brain damage (RBD). 21 of the LBD subjects were classified as having limb apraxia on assessment.

Stims and task: Subjects viewed video clips of an actor performing transitive or intransitive meaningful gestures. These gestures were performed either correctly or incorrectly. So for example, a correct transitive gesture might show the actor strumming a guitar (with an actual guitar), while the incorrect gesture would show the actor strumming a flute (a semantically related foil), or a broom (an unrelated foil). Subjects made correct/incorrect judgments on each trial.

Results: Lesion analysis revealed some amazing-looking data. These data look so good that I thought we'd have to change the blog title to Talking Mirror Neurons! Check it out:

Panel A shows the lesion distribution of patients with limb apraxia (LA+) on the left, and without limb apraxia (LA-) on the right. Notice that the LA+ patients have lesions centered in the inferior frontal gyrus and inferior parietal lobe.

Panel B shows the subtraction of LA+ vs. LA- which highlights the involvement of posterior frontal areas and inferior parietal areas in LA+ patients. This is consistent with much previous data. So far, so good.

Next they analyzed gesture discrimination performance in a variety of ways.

1. LA+ patients performed significantly worse on gesture discrimination than LA- patients.

2. Significant positive correlations were found between measures of gesture production and gesture discrimination "demonstrating a clear relationship between performing and understanding meaningful gestures" (p. 3034).

3. A cluster analysis was performed on the LA+ patients based on their discrimination performance. This analysis revealed, however, that not all LA+ patients had gesture discrimination deficits. In fact, seven of the 21 LA+ patients were classified as having no deficit in understanding gestures. So while there is a correlation between production and understanding, these abilities do dissociate, as much previous work has shown.

Now on to the really amazing data -- the lesion analysis comparing the LA+ patients with vs. without gesture recognition deficits (+GRD vs. -GRD):

Panel A shows the lesion distribution of these two groups, and Panel B shows the subtraction. It couldn't come out cleaner: patients with limb apraxia and gesture discrimination deficits (LA+[GRD+]) have lesions primarily affecting the inferior posterior frontal gyrus, whereas patients with limb apraxia but without gesture discrimination deficits (LA+[GRD-]) have lesions affecting the posterior parietal lobe.

Further, voxel-based lesion-symptom mapping analysis in all 33 LBD patients revealed that performance on the gesture discrimination task correlated with damage to voxels in the inferior frontal gyrus:

Wow! Mirror neurons rule! Is this solid evidence for the role of the posterior IFG in gesture understanding or what!

Maybe "what." Here are two reasons to be suspicious of the findings.

1. The task effectively used a signal detection type paradigm: view a stimulus and decide whether it is signal (correct gesture) or noise (incorrect gesture). The proper way to analyze such data is to calculate d-prime, as this provides an unbiased measure of discriminability. Unfortunately, most of the behavioral analyses, and all of the lesion analyses used uncorrected error rates: a score of 1 was given for hits and for correct rejections, and zeros were given for false alarms and misses. This scoring produces biases, particularly when there are more "noise" trials than "signal" trials, as there were in this study which used a 2:1 noise:signal ratio.

Here's a demonstration using an extreme scenario. Assume three subjects cannot discriminate signal from noise at all but have different response biases:

Subject #1: neutral response bias

10 correct trials: neutral response bias = 5/10 correct

20 incorrect trials: neutral response bias = 10/20 correct

Overall score = 15/30 correct = 50% accuracy (a valid result)

Subject #2: 100% "yes" response bias

10 correct trials: 100% "yes" response bias = 10/10 correct

20 incorrect trials: 100% "yes" response bias = 0/20 correct

Overall score = 10/30 correct = 33% correct

Subject #3: 100% "no" response bias

10 correct trials: 100% "no" response bias = 0/10 correct

20 incorrect trials: 100% "no" response bias = 20/20 correct

Overall score = 20/30 correct = 67% correct

So you get different accuracy scores depending on the subject's response bias, independently of his/her actual ability to discriminate signal from noise. Of course these biases may not be THIS extreme in practice, but they will find their way into the data, and taint the results -- this is the reason for the d-prime statistic. Is it possible that the frontal and parietal regions are correlating with different response biases? Some people have argued that frontal cortex is important for response selection...
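The three-subject demonstration above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the analysis used in the paper: the function names are made up for this sketch, and clipping hit and false-alarm rates to the range 0.01 to 0.99 is an arbitrary assumption made here so that the z-transform stays finite for subjects who always answer "yes" or always answer "no".

```python
from statistics import NormalDist

N_SIGNAL = 10  # "correct gesture" trials (signal)
N_NOISE = 20   # "incorrect gesture" trials (noise), the 2:1 ratio described above


def clip(rate, lo=0.01, hi=0.99):
    """Keep a rate away from 0 and 1 (arbitrary bounds assumed for this sketch)."""
    return min(max(rate, lo), hi)


def accuracy(hits, false_alarms):
    """Uncorrected score: hits and correct rejections both count as 1."""
    correct_rejections = N_NOISE - false_alarms
    return (hits + correct_rejections) / (N_SIGNAL + N_NOISE)


def d_prime(hits, false_alarms):
    """d' = z(hit rate) - z(false-alarm rate)."""
    z = NormalDist().inv_cdf
    return z(clip(hits / N_SIGNAL)) - z(clip(false_alarms / N_NOISE))


# (hits, false alarms) for three subjects who cannot discriminate at all
subjects = {
    "neutral bias": (5, 10),
    'always "yes"': (10, 20),
    'always "no"': (0, 0),
}

for name, (hits, fas) in subjects.items():
    print(f"{name}: accuracy = {accuracy(hits, fas):.2f}, d' = {d_prime(hits, fas):.2f}")
```

Under these assumptions the accuracy score moves with the response bias while d-prime stays at zero for all three subjects, which is the point of the demonstration.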

Upshot: the lesion analyses reported in this paper absolutely have to be redone using d-prime measures instead of overall accuracy. Until then, we cannot be sure how much of these findings are driven by response bias.

Some of you are still very confident that even with d-prime, the results will look similar. I wonder myself whether it will change much. But there's another reason to be suspicious...

2. Figure 5. This figure shows the correlation between the percentage of lesioned tissue in the IFG and overall score on gesture recognition, broken down by gesture type, transitive and intransitive. GRD+ and GRD- patients are included in these correlations. Both correlations are reported as significant, with r-values > .55; not bad. But it is clear from looking at the graphs that the effect is driven by the -GRD group, who all cluster together.

But isn't that part of the point? Patients without gesture recognition deficits don't have much damage to IFG, so they should cluster together, right? True, but it should also be the case that for those patients WITH gesture recognition deficits there is still a strong correlation with the amount of lesioned tissue in IFG (assuming there's enough variance in the lesioned tissue, which there is). However, if you remove the -GRD patients (the square symbols), it looks as though the regression line would be completely flat! This is true even though the percentage of damaged tissue in the +GRD group ranges from ~5% to ~55%. In fact, eyeballing the top graph you can see that the three patients with the LEAST amount of damage to IFG (roughly equal to the amount damaged in the -GRD group!) are performing the same as the four patients who have the MOST IFG damage! Further, all the patients in between these two extremes are distributed in a nebulous cloud that kind of looks like an "X" that is as wide as it is tall (can you find the cross-over double-dissociation in the X?). If IFG were the critical substrate for gesture recognition, one would expect some kind of pattern here, yet there is none.

It seems quite clear that IFG damage is NOT predicting gesture discrimination deficits. Something else is driving the effect that shows up in the beautiful lesion analyses.
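The logic of the Figure 5 argument can be illustrated with a toy example: a tight cluster of unimpaired patients can produce a strong pooled correlation even when there is no relationship at all within the impaired group. Every number below is invented for illustration and is NOT taken from Pazzaglia et al.; the variable names and the hand-rolled `pearson_r` helper are likewise just assumptions of this sketch.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical -GRD group: little IFG damage, high scores, tightly clustered.
minus_lesion = [0, 1, 2, 3, 2, 1, 0]          # % of IFG lesioned (invented)
minus_score = [95, 96, 94, 97, 95, 96, 94]    # % correct (invented)

# Hypothetical +GRD group: damage spans 5% to 55%, scores unrelated to damage.
plus_lesion = [5, 15, 25, 35, 45, 55]
plus_score = [60, 70, 55, 55, 70, 60]

r_pooled = pearson_r(minus_lesion + plus_lesion, minus_score + plus_score)
r_plus_only = pearson_r(plus_lesion, plus_score)

print(f"pooled r = {r_pooled:.2f}")
print(f"+GRD only r = {r_plus_only:.2f}")
```

In this made-up data set the pooled correlation is strong, yet it disappears once the clustered group is removed. That is the pattern the scatterplots appear to show by eye, though only a re-analysis of the real data could confirm it.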

Overall conclusion: Pazzaglia et al. made a valiant effort, and should be commended for looking at their data from so many angles, and for reporting their findings openly and straightforwardly. As it stands, however, the data do not support the mirror neuron theory of action understanding. In fact, the data seem to indicate (i) action production and action understanding dissociate, and (ii) damage to the presumed human homologue of monkey area F5 does not correlate with action understanding deficits.

I would love to see the follow-up paper that re-analyzes all the data using d-primes. Maybe then we'll have at least one piece of evidence supporting the mirror neuron theory of action understanding. To date, there isn't any evidence in support of the theory.


Monday, May 19, 2008

Apraxia, Gesture Recognition, and Mirror Neurons

This week in our Mirror Neuron course we looked over some papers in the apraxia literature, which seemed to hold the promise of providing evidence in support of the MN theory of action understanding. Specifically, based on some of the abstracts, I thought we would find (i) that disorders of gesture production would be strongly associated with disorders of gesture understanding, and (ii) that damage to the frontal (putative) mirror neuron system would be associated with these production/comprehension disorders.

Instead, and despite the conclusions of some of the authors, the papers we read made a pretty decent case against the MN theory of action understanding.

First of all, it is very clear that deficits in gesture production/imitation dissociate from the ability to comprehend gestures. For example, Table 4 of Tessari et al. 2007 (Brain, 130:1111-26) shows data that include eight patients (out of a sample of 32 left hemisphere patients) who are performing at 60% correct or less on action imitation, while recognizing actions at 90% or better. Case 27 is a dramatic example, with only 10% correct imitations of meaningful actions and 100% correct on action recognition. It's possible, though, that imitation deficits in such cases result from some relatively peripheral motor control mechanism that is outside the mirror system proper. This is reasonable, but then I have to wonder whether data from, say, motor evoked potentials, which are touted as strong evidence for the mirror system (and which might be considered rather peripheral!), should now be discounted for being peripheral to the mirror system. In other words, you can't point to a very peripheral response like MEPs and call it evidence for mirror neurons, and then turn around and explain imitation deficits caused by (non-M1) cortical damage as arising from systems that are peripheral to the mirror system.

In other, other words, it's time to be explicit about what components of the motor system are part of the mirror system. As it stands, "mirror neuron theory" is effectively untestable because any result just gets folded into the mythology. Humans show "mirror" responses for pantomimed gestures (unlike macaque F5 cells)? That's because humans are more sophisticated than monkeys. The STS responds just as selectively as F5 (more so!) to perceived actions? That's because it inherits its mirror properties from the real mirror system. Passive viewing of non-action stimuli (rectangles with dots in them) activates the mirror system? That's because of motor imagery associated with the non-action stimuli. Mirror neuron theorizing is starting to remind me of one of those house-of-mirrors attractions at the county fair: Mirrors everywhere, but mostly reflecting nothing.


But I digress... Let's focus on the two papers that seem to make the best case for action understanding deficits associated with lesions to the inferior frontal gyrus.

Saygin et al. studied the ability of aphasic patients to understand action-related pictures. Ok, not exactly action understanding (pictures are static), but let's assume that these pictures induced action percepts/concepts. Subjects viewed actions with the object of the action removed, such as a boy licking an ice cream cone, but with no ice cream cone in his hand. Subjects then had to pick the matching object out of an array of two pictured objects which included the target (ice cream cone) and a distractor. Distractors included (on different trials) semantically related items (cake), "affordance" related items (a bouquet of flowers which is held in a similar manner but not normally licked), or an unrelated item (rooster). A matched verbal version of the task was also administered in which subjects read sentence fragments ("He is licking the _____") and had to pick the correct picture, as above.

The results were quite interesting: (i) performance on the two tasks was completely uncorrelated (after the one outlier patient was removed), showing that action understanding is domain-specific. (ii) Lesion analysis revealed that deficits on the pictorial task were correlated with lesions in the inferior frontal gyrus, whereas deficits on the linguistic task were correlated with a different distribution of lesions that was more posterior and involved the anterior temporal lobe (interesting for other reasons). So both behavioral and brain data show these two tasks dissociate, and pictorial action understanding is associated with the frontal "mirror system." The results were interpreted as support for the mirror neuron theory of action understanding.

But this is an odd theoretical position. Apparently, the ability to understand actions generally is dependent on the sensory signals that access that action-concept information. That is, having a deficit in the ability to understand actions from action depictions does not prevent you from accessing action-concepts via a verbal route. Put another way, damage to your mirror neuron system (the frontal lesions associated with pictorial action understanding deficits) leaves action-concepts (action understanding) intact, as evidenced by the fact that you can access them via another input route. Therefore, the representation of action meaning (the understanding part) is not in the motor representation, but is somewhere else. At most, the motor system may facilitate access to the meaning of actions. But these data show that the semantics of action is NOT inherent to the motor system. Alternatively, the correlation with frontal lobe structures for this action understanding task may have more to do with making complex inferences from static ambiguous pictures (have a look at the sample stimulus item in the paper -- the "licking boy" could just as easily be smiling real big, or have a fat lip).

Enough for now. I'll comment in the next post on the strongest evidence we've seen so far for the MN theory of action understanding. (Turns out it's not so strong.)

Instead, and despite the conclusions of some of the authors, the papers we read made a pretty decent case against the MN theory of action understanding.

First of all, it is very clear that deficits in gesture production/imitation dissociate from the ability to comprehend gestures. For example, Table 4 of Tessari et al. 2007 (Brain, 130:1111-26) shows data that includes eight (8) patients (out of a sample of 32 left hemisphere patients), who are performing at 60% correct or less on action imitation, while recognizing actions at 90% or better. Case 27, is a dramatic example, with only 10% correct imitations of meaningful actions and 100% correct on action recognition. It's possible, though, that imitation deficits in such cases result from some relatively peripheral motor control mechanism that is outside the mirror system proper. This is reasonable, but then I have to wonder whether data from say, motor evoked potentials, which are touted as strong evidence for the mirror system (and which might be considered rather peripheral!), should now be discounted for being peripheral to the mirror system. In other words, you can't point to a very peripheral response like MEPs and call it evidence for mirror neurons, and then turn around and explain imitation deficits caused by (non-M1) cortical damage as arising from systems that are peripheral to the mirror system.

In other, other words, it's time to be explicit about what components of the motor system are part of the mirror system. As it stands, "mirror neuron theory" is effectively untestable because any result just gets folded into the mythology: humans show "mirror" responses for pantomimed gestures (unlike macaque F5 cells)? That's because humans are more sophisticated than monkeys.

The STS responds just as selectively (more so!) to perceived actions than F5? That's because it inherits its mirror properties from the real mirror system. Passive viewing of non-action stimuli (rectangles with dots in them) activates the mirror system? That's because of motor imagery associated with the non-action stimuli. Mirror neuron theorizing is starting to remind me of one of those house-of-mirrors attractions at the county fair: Mirrors everywhere, but mostly reflecting nothing.

The STS responds just as selectively (more so!) to perceived actions than F5? That's because it inherits its mirror properties from the real mirror system. Passive viewing of non-action stimuli (rectangles with dots in them) activates the mirror system? That's because of motor imagery associated with the non-action stimuli. Mirror neuron theorizing is starting to remind me of one of those house-of-mirrors attractions at the county fair: Mirrors everywhere, but mostly reflecting nothing.But I digress... Let's focus on the two papers that seem to make the best case for action understanding deficits associated with lesions to the inferior frontal gyrus.

Saygin et al. studied the ability of aphasic patients to understand action-related pictures. Ok, not exactly action understanding (pictures are static), but let's assume that these pictures induced action percepts/concepts. Subjects viewed actions with the object of the action removed, such as a boy licking an ice cream cone, but with no ice cream cone in his hand. Subjects then had to pick the matching object out of an array of two pictured objects which included the target (ice cream cone) and a distractor. Distractors included (on different trials) semantically related items (cake), "affordance" related items (a bouquet of flowers which is held in a similar manner but not normally licked), or an unrelated item (rooster). A matched verbal version of the task was also administered in which subjects read sentence fragments ("He is licking the _____") and had to pick the correct picture, as above.

The results were quite interesting: (i) Performance on the two tasks was completely uncorrelated (after the one outlier patient was removed), showing that action understanding is domain-specific. (ii) Lesion analysis revealed that deficits on the pictorial task were correlated with lesions in the inferior frontal gyrus, whereas deficits on the linguistic task were correlated with a different distribution of lesions that was more posterior and involved the anterior temporal lobe (interesting for other reasons). So both behavioral and brain data show these two tasks dissociate, and pictorial action understanding is associated with the frontal "mirror system." The results were interpreted as support for the mirror neuron theory of action understanding.

But this is an odd theoretical position. Apparently, the ability to understand actions generally is dependent on the sensory signals that access that action-concept information. That is, having a deficit in the ability to understand actions from action depictions does not prevent you from accessing action-concepts via a verbal route. Put another way, damage to your mirror neuron system (the frontal lesions associated with pictorial action understanding deficits) leaves action-concepts (action understanding) intact, as evidenced by the fact that you can access them via another input route. Therefore, the representation of action meaning (the understanding part) is not in the motor representation, but is somewhere else. At most the motor system may facilitate access to the meaning of actions. But these data show that the semantics of action is NOT inherent to the motor system. Alternatively, the correlation with frontal lobe structures for this action understanding task may have more to do with making complex inferences from static ambiguous pictures (have a look at the sample stimulus item in the paper -- the "licking boy" could just as easily be smiling real big, or have a fat lip).

Enough for now. I'll comment in the next post on the strongest evidence we've seen so far for the MN theory of action understanding. (Turns out it's not so strong.)

Friday, May 16, 2008

Happy Birthday to Talking Brains

Speaking of birthdays, here's a picture of me with a pretty creative birthday cake given to me by my lab a few years ago.

Wednesday, May 14, 2008

Spoken Word Memory Traces within the Human Auditory Cortex

This looks like an interesting study using fMRI repetition suppression methods to identify neural networks involved in spoken word recognition. It appears such effects were found bilaterally in the superior temporal gyrus. Worth a close look!

Spoken Word Memory Traces within the Human Auditory Cortex Revealed by Repetition Priming and Functional Magnetic Resonance Imaging

Pierre Gagnepain, Gael Chetelat, Brigitte Landeau, Jacques Dayan, Francis Eustache, and Karine Lebreton

J. Neurosci. 2008;28 5281-5289

Tuesday, May 13, 2008

Mirror Neuron Course: Reading set #5 - Apraxia and gesture comprehension

So far we have not found any evidence to support the claim that the mirror neuron system is the "basis" of action understanding. In fact, all of the evidence discussed so far refutes this claim. Let's see if we can find some evidence in the apraxia literature. If the claim is right, apraxic patients should have a deficit in comprehending gestures, and the underlying lesions should implicate the mirror system. Scanning some of the abstracts of the reading list below suggests the evidence is mixed.

Pazzaglia M, Smania N, Corato E, Aglioti SM.

Neural underpinnings of gesture discrimination in patients with limb apraxia.

J Neurosci. 2008 Mar 19;28(12):3030-41.

PMID: 18354006 [PubMed - indexed for MEDLINE]

Halsband U, Schmitt J, Weyers M, Binkofski F, Grützner G, Freund HJ.

Recognition and imitation of pantomimed motor acts after unilateral parietal and premotor lesions: a perspective on apraxia.

Neuropsychologia. 2001;39(2):200-16.

PMID: 11163376 [PubMed - indexed for MEDLINE]

Buxbaum LJ, Kyle K, Grossman M, Coslett HB.

Left inferior parietal representations for skilled hand-object interactions: evidence from stroke and corticobasal degeneration.

Cortex. 2007 Apr;43(3):411-23.

PMID: 17533764 [PubMed - indexed for MEDLINE]

Saygin AP, Wilson SM, Dronkers NF, Bates E.

Action comprehension in aphasia: linguistic and non-linguistic deficits and their lesion correlates.

Neuropsychologia. 2004;42(13):1788-804.

PMID: 15351628 [PubMed - indexed for MEDLINE]

Tessari A, Canessa N, Ukmar M, Rumiati RI.

Neuropsychological evidence for a strategic control of multiple routes in imitation.

Brain. 2007 Apr;130(Pt 4):1111-26. Epub 2007 Feb 9.

PMID: 17293356 [PubMed - indexed for MEDLINE]

Scientific Review Officer positions at NIH Center for Scientific Review

The Center for Scientific Review (CSR) currently has many openings for Scientific Review Officer (SRO) positions covering all areas of expertise. This is an exciting job and supports the vital mission of NIH to support and conduct medical and behavioral research. The work of the SROs is highly valued by NIH and the scientific community because the single most important factor in determining whether a grant application is funded by NIH is how well it fares in peer review.

The following is a link to the current vacancy announcement: This link outlines the job description as well as the expected qualifications of a successful candidate. The candidate is expected to have significant research experience as an independent investigator beyond the postdoctoral level. The job number is CSR08-264838-CR-DE and may be located at http://tinyurl.com/457pjc.

Monday, May 12, 2008

Is premotor cortex essential in speech perception?

Yes, according to Meister et al. (2007, The essential role of premotor cortex in speech perception. Current Biology, 17:1692-6). I would suggest that it depends on what you mean by "essential."

This is a great paper. I like the experiment, I like the data, and I like almost all of the conclusions.

The Experiment

TMS is applied to regions in the left premotor and left superior temporal areas determined by peak activations in a previous fMRI study using a speech listening task. Here's where they stimulated:

During TMS the subject is asked to discriminate voiceless stop consonants in single syllables presented in noise (i.e., the task is relatively hard), or in a control condition, discriminate colors, or in another control, discriminate tones. All tasks are matched for difficulty. I'm not a fan of discrimination tasks for assessing speech networks, and it appears that to get an effect, the authors had to make the task more difficult by adding noise, but let's not worry about this here.

Performance is assessed in baseline and under TMS.

The Data

Here's what they found:

TMS to premotor cortex disrupted speech discrimination relative to baseline. Stimulation to STG did not disrupt speech discrimination. TMS anywhere did not disrupt color discrimination.

Two things to note about these effects. 1. The effect is small. Performance went from 78.9% correct in baseline to 70.6% correct during TMS -- a drop of 8 percentage points. Not a whopping effect, but reliable, and who knows what kind of disruption TMS actually achieves... 2. The STG stimulation site (see fig above) looks pretty dorsal. I suspect they might have seen a mild effect if they had targeted STS.

The tone control was run on only a subset of subjects. Baseline accuracy: 85.5%. Premotor stimulation: 80.7% (not significant). STG stimulation: 79.1% (significant decrement). Looks like the premotor stimulation just missed significance (no p-value reported). So I suspect that if they had run a full set of subjects, premotor stimulation would have interfered with tone discrimination as well, which is an interesting result.
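For anyone who wants to check the effect sizes, here is a minimal sketch of the arithmetic. It uses only the accuracies quoted in this post; the variable and function names are my own, and it says nothing about statistical significance, which depends on data not reported here.

```python
# Percent-correct values as quoted in the post (Meister et al. 2007).
# Names are illustrative, not taken from the paper.
speech = {"baseline": 78.9, "premotor_tms": 70.6}
tone = {"baseline": 85.5, "premotor_tms": 80.7, "stg_tms": 79.1}


def drop(baseline, stimulated):
    """Absolute drop in percentage points, rounded to one decimal place."""
    return round(baseline - stimulated, 1)


speech_drop = drop(speech["baseline"], speech["premotor_tms"])
tone_premotor_drop = drop(tone["baseline"], tone["premotor_tms"])
tone_stg_drop = drop(tone["baseline"], tone["stg_tms"])

print("speech, premotor TMS:", speech_drop)
print("tone, premotor TMS:  ", tone_premotor_drop)
print("tone, STG TMS:       ", tone_stg_drop)
```

So the speech effect is a bit over 8 points, and the non-significant premotor effect on tones is a bit under 5, which is more than half the size of the speech effect.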

The Conclusions

1. Conclusion #1 is that TMS disrupts speech discrimination. Yes, I agree. So do frontal lesions, so this isn't exactly news, but it's nice to know that a blob of fMRI activation is actually doing something.

2. The role of the premotor site in speech perception may be that "premotor cortex generates forward models... that are compared within the superior temporal cortex with the results from initial acoustic-speech analysis .... Premotor cortex provides top-down information that facilitates speech perception in circumstances such as when the acoustic signal is degraded...." p. 1694. I LOVE it! Yes, exactly! Premotor cortex is NOT the seat of speech perception, but can facilitate the work of systems in the superior temporal lobe.

3. Regarding the lack of an effect of STG stimulation on speech perception, they suggest that this may result from bilateral organization of perceptual speech processes. Bingo! (But it also could be that they missed the critical spot.)

4. "... sensory regions are not sufficient alone for human perception." p. 1695. Depends on what you mean by human perception. If "human perception" refers to those 8 percentage points during discrimination of voiceless stop consonants presented in noise, then yes, I agree.

5. "... the perceptual representations of speech sounds, and perhaps sensory stimuli more general, are fundamentally sensory motor in nature." Damn. And they were on such a roll! :-) I disagree with the "representations" part. Change that word to "processing" and I'm OK with the statement -- well, better change "are fundamentally" to "can be in part," while we're at it. There's no evidence that the representations of speech sounds have a motor component. There's only evidence that the representations can be mildly affected by motor factors. For now, until we have some evidence, let's leave speech sound representations in the temporal lobe.

Iacoboni et al. 1999 follow up

What is driving the perceptual response to non-action stimuli in premotor cortex? (See last post for the context of this question.) Iacoboni et al. write, "During all scans the participants knew that the task was either to move a finger or to refrain from moving it. Thus mental imagery of their finger (or of the finger movement) should have been present even during simple observation." p. 2526-2527. In other words, finger movements, which activate premotor cortex, are associated not only with actions, but in these subjects also with non-action stimuli, and so simply presenting the stimuli becomes sufficient to elicit motor imagery and therefore activate premotor cortex. A very reasonable explanation; I fully agree. But this raises a question: if any old stimulus can elicit activity in the mirror system simply as a result of association, why can't we explain action-perception responses the same way? On this view, the only reason why there is more activation for action via imitation compared with action cued by rectangles with symbols on them (the paper's main result) is because the association is stronger in the former condition.

I actually like this paper. I even agree with the main conclusion that the circuit highlighted by the study supports imitation (it's mostly the action understanding part of MNs that I think is seriously flawed). I just think that it might be useful to think of imitation as part of a broader system involved in sensory-motor integration that, as a network, isn't restricted to actions.

Friday, May 9, 2008

The human mirror system is not selective for action perception: Evidence from Iacoboni et al. 1999

Iacoboni et al. (1999, Cortical mechanisms of human imitation, Science, 286:2526-8) can probably be considered a "classic" paper in the mirror neuron literature. Certainly, it has been highly influential, being cited over 700 times so far. The paper is a good one, and demonstrates quite clearly that the perception and execution of action both activate the left inferior frontal cortex.

Subjects executed finger movements under three perceptual conditions which cued the movement: 1. viewing finger movement that the subject was to imitate, 2. viewing a static hand with a symbol on one finger indicating which movement to perform, and 3. viewing a gray rectangle with a dot in a spatial position that cued the target finger movement. These three perceptual conditions were also presented in an observe-only condition.

The main result is that imitating viewed actions results in more activation in the left inferior frontal gyrus (a portion of Broca's area) than making those same movements when cued by non-actions. You can see this in the time course data above: the three leftmost peaks of activation correspond, respectively, to the action imitation condition (largest peak), the grey rectangle with dots condition, and the symbols on static hands condition. Nice result.

What's also interesting, though, is the pattern of activation for the observe-only condition which is shown in the right half of the time course. Again, the three peaks correspond to the three conditions, moving hand, rectangle, static symbol hand. There's no difference in the magnitude of activation across these three conditions! What business does a grey rectangle with dots on it have activating the action understanding/imitation system?! And just as robustly as viewing actions themselves! Is Broca's area the neural basis of grey-rectangle-with-dots-on-it understanding? Or grey-rectangle imitation?

Homework question: What's driving this non-action response?

Wednesday, May 7, 2008

Mirror Neuron Course: Reading Set #4

We plan to look at some human mirror system work for next week's meeting:

Grèzes J, Armony JL, Rowe J, Passingham RE.

Activations related to "mirror" and "canonical" neurones in the human brain: an fMRI study.

Neuroimage. 2003 Apr;18(4):928-37.

Iacoboni M, Woods RP, Brass M, Bekkering H, Mazziotta JC, Rizzolatti G.

Cortical mechanisms of human imitation.

Science. 1999 Dec 24;286(5449):2526-8.

Iacoboni M.

The role of premotor cortex in speech perception: Evidence from fMRI and rTMS.

J Physiol Paris. 2008 Mar 18. [Epub ahead of print]

Meister IG, Wilson SM, Deblieck C, Wu AD, Iacoboni M.

The essential role of premotor cortex in speech perception.

Curr Biol. 2007 Oct 9;17(19):1692-6. Epub 2007 Sep 27

Tuesday, May 6, 2008

A proof that mirror neurons are not the basis of action understanding?

A couple of comments on the Gallese et al. 1996 paper (Brain, 119:593-609) and the Rizzolatti, Fogassi, & Gallese 2001 review paper (Nature Reviews Neuroscience, 2:661-670).

The Gallese et al. paper is an empirical report, and as such, a good one. Have a look if you want to know about the functional properties of mirror neurons. One interesting tidbit: five different hand actions were assessed: grasping, placing, manipulating, hand interaction, and holding. Most cells, by far, are partial to grasping. Of 92 cells sampled, 30 were selective for grasping (the next highest selective cell counts were for placing cells and manipulating cells, 7 cells each), and 39 responded to grasping plus some other action. So grasping is represented in 75% of sampled cells. This probably just means monkeys grasp a lot, but maybe it's worth noting.
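The 75% figure is just the grasping-selective cells plus the grasping-plus-other cells, divided by the total sample. A quick sketch of that arithmetic, using only the counts quoted above (the variable names are mine):

```python
# Cell counts as quoted in the post from Gallese et al. 1996.
total_cells = 92
grasping_only = 30        # selective for grasping alone
grasping_plus_other = 39  # respond to grasping plus some other action

# Any cell that responds to grasping, alone or in combination.
grasping_any = grasping_only + grasping_plus_other
share = grasping_any / total_cells

print(f"{grasping_any}/{total_cells} = {share:.0%}")  # prints "69/92 = 75%"
```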

There is some commentary in the discussion section that's worth examining because a clear definition of action understanding is provided. "By this term [understanding], we mean the capacity to recognize that an individual is performing an action, to differentiate this action from others analogous to it, and to use this information in order to act appropriately" (p. 606).

This is a strong position. If we flip the statement around, it implies that without MNs the animal cannot "recognize that an individual is performing an action" or "differentiate this action from others analogous to it" or "use this information in order to act appropriately".

This view seems to rule out the possibility of observational learning. Now, I'm way outside my area of expertise, so I'm going to resist stepping too far out on a limb. I am aware however, that observational learning occurs in many species. E.g., I saw a TV science show once where an octopus learned to get into a jar by watching another octopus open the jar. (I TOLD you I was outside my area of expertise!) Anyway, the point is, making a claim that rules out observational learning seems to a lay person like me, the wrong theoretical move to make (see below).

We looked at the Rizzolatti, Fogassi, & Gallese (RFG) review paper because it was continually cited in the Rizzolatti & Craighero paper after statements regarding other mechanisms for action understanding. This is important, because if there is another way to break into the system, you might get out of some of the circularity problems we noted previously with respect to imitation and action understanding, as well as this related problem with observational learning. I was hoping we'd get a bit of clarification on the question of these other mechanisms, and we did.

RFG are admirably strong in their claims regarding the importance of MNs in action understanding. They're certainly not hedging any claims: "We understand action because the motor representation of that action is activated in our brain" (p. 661).

So what about those pesky STS cells that also respond to the perception of action? Well, RFG provide a nice summary of these cells. STS cells respond not only to simple actions, but also appear to combine action-related bits of information. For example, the firing of some action responsive cells in STS is modulated by eye gaze information: the cell fires only if the actor is looking at the target of the action, not if the actor is looking away.

RFG summarize, "The properties of these neurons show that the visual analysis of action reaches a surprising level of complexity in the STSa" (p. 666). A level that seems to be much more sophisticated than that found in F5, I would interject. RFG continue, "But the existence of these neurons and, more generally, of neurons that bind different types of visual features of an observed action, is not a sufficient condition for action understanding per se" (p. 666). I completely agree. You can't make logically necessary inferences about function on the basis of correlated neural activity. But the same is true of mirror neurons.

I think, though, that the point RFG are trying to make here is that unlike in pure sensory perception (in their view), the "semantics" of an action is somehow inherent in the action itself. Again, evidence from aphasia leads me to question this position. How is it that there are patients who can perfectly reproduce an action (repeat speech verbatim) yet fail to understand the meaning? But let's move on.

So how does STS acquire these complex response properties? To the best I can tell, RFG hold that the motor system endows the STS with its action perception abilities.

"We argue that the sensory binding of different actions found in the STSa is derived from the development of motor synergistic actions. Efferent copies of these actions activate specific sensory targets for a better control of action. Subsequently, this association is used in understanding the actions of others" (p. 666).

So it sounds like the STS action recognition system fully inherits its action recognition/understanding abilities from the motor system. How it inherits "a surprising level of complexity" from a much simpler system is not clear. But RFG's position is clear: action understanding is a function of the motor system. Action understanding can be achieved via other systems, such as the STS, but this is only because the motor system has endowed such systems with its action understanding ability via previous association.

Correct me if I'm wrong, but from this collection of assumptions, namely,

1. "We understand action because the motor representation of that action is activated in our brain." RFG p. 661.

2. "We cannot claim that this is the only mechanism through which actions done by others may be understood." RC p. 172.

BUT,

3. These other mechanisms acquire their action understanding properties via associations with the motor system. (My paraphrase of above quotes from RFG p. 666.)

it follows that an action cannot be understood unless it has already been executed by the perceiver. From this it follows that observational learning should be impossible in creatures that use their mirror neurons to understand actions. I don't know about monkeys, but I believe (without knowledge of the literature) that humans are good at observational learning.

If all of these assumptions are correct, we've just proven that the mirror neuron theory of action understanding is wrong.

So let's discuss the problems with my assumptions. Anyone have any ideas?

The Gallese et al. paper is an empirical report, and as such, a good one. Have a look if you want to know about the functional properties of mirror neurons. One interesting tidbit: five different hand actions were assessed, grasping, placing, manipulating, hand interaction, and holding. Most cells, by far, are partial to grasping. Of 92 cells sampled, 30 were selective for grasping (next highest selective cell count was placing cells & manipulating cells, 7 cells each), and 39 responded to grasping plus some other action. So grasping is represented in 75% of sampled cells. This probably just means monkeys grasp a lot, but maybe it's worth noting.

There is some commentary in the discussion section that's worth examining because a clear definition of action understanding is provided. "By this term [understanding], we mean the capacity to recognize that an individual is performing an action, to differentiate this action from others analogous to it, and to use this information in order to act appropriately" (p. 606).

This is a strong position. If we flip the statement around, it implies that without MNs the animal cannot "recognize that an individual is performing an action" or "differentiate this action from others analogous to it" or "use this information in order to act appropriately".

This view seems to rule out the possibility of observational learning. Now, I'm way outside my area of expertise, so I'm going to resist stepping too far out on a limb. I am aware however, that observational learning occurs in many species. E.g., I saw a TV science show once where an octopus learned to get into a jar by watching another octopus open the jar. (I TOLD you I was outside my area of expertise!) Anyway, the point is, making a claim that rules out observational learning seems to a lay person like me, the wrong theoretical move to make (see below).

We looked that Rizzolatti, Fogassi, & Gallese (RFG) review paper because it was continually cited in the Rizzolatti & Craighero paper after statements regarding other mechanisms for action understanding. This is important, because if there is another way to break into the system, you might get out of some of the circularity problems we noted previously with respect to imitation and action understanding, as well as this related problem with observational learning. I was hoping we'd get a bit of clarification on the question of these other mechanisms, and we did.

RFG are admirably strong in their claims regarding the importance of MNs in action understanding. They're certainly not hedging any claims: "We understand action because the motor representation of that action is activated in our brain" (p. 661).

So what about those pesky STS cells that also respond to the perception of action? Well, RFG provide a nice summary of these cells. STS cells respond not only to simple actions, but also appear to combine action-related bits of information. For example, the firing of some action responsive cells in STS is modulated by eye gaze information: the cell fires only if the actor is looking at the target of the action, not if the actor is looking away.

RFG summarize, "The properties of these neurons show that the visual analysis of action reaches a surprising level of complexity in the STSa" (p. 666). A level that seems to be much more sophisticated than that found in F5, I would interject. RFG continue, "But the existence of these neurons and, more generally, of neurons that bind different types of visual features of an observed action, is not a sufficient condition for action understanding per se" (p. 666). I completely agree. You can't make logically necessary inferences about function on the basis correlated neural activity. But the same is true of mirror neurons.

I think, though, that the point RFG are trying to make here is that unlike in pure sensory perception (in their view), the "semantics" of an action is somehow inherent in the action itself. Again, evidence from aphasia leads me to question this position. How is it that there are patients who can perfectly reproduce an action (repeat speech verbatim) yet fail to understand the meaning? But let's move on.

So how does STS acquire these complex response properties? As best I can tell, RFG hold that the motor system endows the STS with its action perception abilities.

"We argue that the sensory binding of different actions found in the STSa is derived from the development of motor synergistic actions. Efferent copies of these actions activate specific sensory targets for a better control of action. Subsequently, this association is used in understanding the actions of others" (p. 666).

So it sounds like the STS action recognition system fully inherits its action recognition/understanding abilities from the motor system. How it inherits "a surprising level of complexity" from a much simpler system is not clear. But RFG's position is clear: action understanding is a function of the motor system. Action understanding can be achieved via other systems, such as the STS, but this is only because the motor system has endowed such systems with its action understanding ability via previous association.

Correct me if I'm wrong, but from this collection of assumptions, namely,

1. "We understand action because the motor representation of that action is activated in our brain." RFG p. 661.

2. "We cannot claim that this is the only mechanism through which actions done by others may be understood." RC p. 172.

BUT,

3. These other mechanisms acquire their action understanding properties via associations with the motor system. (My paraphrase of above quotes from RFG p. 666.)

it follows that an action cannot be understood unless it has already been executed by the perceiver. From this it follows that observational learning should be impossible in creatures that use their mirror neurons to understand actions. I don't know about monkeys, but I believe (without knowledge of the literature) that humans are good at observational learning.

If all of these assumptions are correct, we've just proven that the mirror neuron theory of action understanding is wrong.

So let's discuss the problems with my assumptions. Anyone have any ideas?

Friday, May 2, 2008

Rizzolatti & Craighero (2004): Class discussion summary #6 (relation between hand and mouth)

There's an interesting discussion towards the end of RC's paper that covers evidence for links between hand and mouth gestures. This is relevant to the speculations on the mirror system's role in the evolution of speech. Links between hand and mouth are taken as evidence of a common neural foundation, and therefore a mechanism to transfer functions from one system (grasping) to the other (speech).

The interesting observation is that the act of grasping objects influences orofacial gestures. Gentilucci et al. (2001, J. Neurophysiol. 86:1685-99) showed that lip aperture during the articulation of a syllable was larger if subjects were concurrently reaching for a larger compared to a smaller object. Interesting. Similar evidence from other studies was discussed as well. But why the link? Drew Headley, a grad student in Norm Weinberger's lab here at UCI who is taking the "live" MN course, suggested a simple explanation: eating. Hand and mouth movements are associatively linked because we generally use our hands to stuff our mouths! This may even explain correlations in the size of the gesture. When we pop a grape in our mouths we have a smallish grip on the food and a smallish lip aperture to allow the grape in, whereas when we take a bite of an apple both our grip and our lip aperture are larger. So maybe the association between hand and mouth isn't necessarily related to a common, evolution-linked neural substrate. Maybe it's just because we eat with our hands.

Later in the paper a study is discussed in another context, where TMS-induced MEPs were recorded on the lip and hand (Watkins et al, 2003, Neuropsychologia, 41:989-94). Listening to speech enhanced the MEPs on the lip, but not the hand. This was used as evidence for the existence of an "echo-neuron" system. But it also demonstrates a clear dissociation between the hand and mouth using a now standard probe of mirror neuron activity (TMS-induced MEPs).

In short, it seems there is plenty of room for skepticism regarding the idea that "Hand/arm and speech gestures must be strictly linked and must, at least in part, share a common neural substrate" (p. 184).

Thursday, May 1, 2008

Rizzolatti & Craighero (2004): Class discussion summary #5

Yet another post on RC's much cited paper -- 517 citations so far, according to Google Scholar.

Mirror Neurons in Humans

As we all know, direct evidence for the existence of MNs in humans is completely lacking because you can't record from single cells in humans all that readily. But let's not get hung up on this technicality, and grant that such cells probably exist. So assuming this, RC point out that human MNs are different from monkey MNs, in two ways:

1. The human mirror system does not require the presence of an object-directed action. It will respond to meaningless intransitive gestures.

2. The human mirror system, in addition to its assumed function in action understanding, also supports imitation.

I have no problem with the idea that a system might change from one species to the next, so I don't object to the idea that the mirror system might be different in humans. But it does mean that important properties found in MNs in monkeys, such as the fact that they don't seem to be involved in boring old movement preparation, can't automatically be generalized to humans. You've got to do the experiments.

Another general point worth making is that when we think in terms of evolution, we have to remember that human mirror neurons did not evolve from macaque mirror neurons. Rather the behavior of human mirror neurons (assuming they exist) and macaque mirror neurons evolved from neurons of some type that existed in our most recent common ancestor, which lived about 25 million years ago. It is therefore a mistake to think of the human "mirror system" as evolving from macaque mirror neurons.

Where is the human mirror system? According to RC, the "core" of it is "the rostral part of the inferior parietal lobule and the lower part of the precentral gyrus plus the posterior part of the inferior frontal gyrus" (p. 176). This is based on a number of studies cited on page 176. A good discussion of anatomy follows on the next page or so. Worth reading for sure.

On page 179, RC discuss an interesting fMRI study by Buccino et al. (2004, JoCN, 16-1-14). Subjects watched video clips of silent mouth actions performed by humans, monkeys, and dogs. There were two types of actions: biting, and oral communicative gestures (speech, lip smacking, and barking for humans, monkeys, and dogs, respectively). Biting activated the inferior parietal lobule, pars opercularis and precentral gyrus independent of species (some slight hemispheric asymmetries were found though). Communicative gestures yielded a different pattern: Speech and lip smacking activated the pars opercularis, whereas barking "did not produce any frontal lobe activation" (p. 179).

You know what I'm going to say. Presumably, subjects in this study understood the barking actions. Yet this was achieved without the activation of the mirror system. Mirror neurons, therefore, are not necessary for action understanding.

RC have a different spin. "Actions belonging to the motor repertoire of the observer are mapped on his/her motor system. Actions that do not belong to this repertoire do not excite the motor system of the observer and appear to be recognized essentially on a visual basis without motor involvement. It is likely that these two different ways of recognizing actions have two different psychological counterparts. In the first case the motor 'resonance' translates the visual experience into an internal 'personal knowledge'... whereas this is lacking in the second case" (p. 179).

Ok, so following this reasoning, mirror neurons are not the basis of action understanding, because that can be achieved "on a visual basis without motor involvement", but instead are the basis of "internal knowledge" of an action. In other words, they are the neural correlate of the psychological experience, "hey, I can do that too!" I wonder if mirror neurons would be as popular as they are if their discoverers claimed that they were the neural basis of "internal knowledge" of perceived actions, rather than the neural basis of action understanding.

Notice now, that we have a double-dissociation between action understanding and mirror system activity in humans. The mirror system can be activated during the perception of actions that are meaningless (and therefore don't lead to understanding), and action understanding can be achieved without activation of the mirror system (viewing a barking dog).

Incidentally, the same double-dissociation has long been documented in the aphasia literature, and in a more forceful fashion. Severe Broca's aphasics can understand speech in the absence of a frontal mirror system, whereas patients with transcortical sensory aphasia (or better yet, the syndrome reported by Geschwind as "isolation of the speech area") can imitate speech quite well indicating an intact mirror system, but without the ability to comprehend it.
