News and views on the neural organization of language moderated by Greg Hickok and David Poeppel

Saturday, February 28, 2009

If we held an auditory/speech/language neuroscience conference, would anybody come?

A while back I asked which is the best/preferred conference for speech/language neuroscience. I think I got about 2 responses. Maybe this means there isn't an ideal venue. So David and I have been talking about developing one. Would anybody come? Let us know what you think by commenting on this post and/or responding to the new survey.

Thursday, February 26, 2009

The Great Voodoo Hunt: A reminder that it's OK to plot your fMRI data

I can see the courtroom-style drama being played out in every colloquium, poster session, job interview, and platform presentation involving fMRI research (not to mention reviews!)...

Accuser: [Sternly] Have you ever run a statistical test on data from an ROI that was defined by the same data?

fMRI Researcher: [Eyes twitch nervously] No sir.

Accuser: Have you ever run a correlation between an ROI and behavioral data using the same data that defined the ROI?

fMRI Researcher: [Wipes sweat from brow, cowers] No sir. Never.

Accuser: [In a rising crescendo] HAVE YOU EVER PLOTTED YOUR DATA FROM AN ROI?

fMRI Researcher: [Breaks down in tears] YES! YES I HAVE BUT I SWEAR I DIDN'T MEAN TO HURT ANYBODY!

Audience: [snickers, jeers, and dismisses researcher's entire body of work as -- cue dramatic music -- VOODOO!]

No doubt you are aware of Ed Vul's voodoo correlations paper by now. It is a useful paper in that it reminds us to keep our analyses independent. Nothing new statistically, of course, but it never hurts to be reminded. I decided I really didn't want to spend much time on the topic here on Talking Brains because it is getting plenty of attention without our help. But then someone pointed this out to me from Ed's chapter with Nancy Kanwisher:

The most common, most simple, and most innocuous instance of non-independence occurs when researchers simply plot (rather than test) the signal change in a set of voxels that were selected based on that same signal change. ... In general, plotting non-independent data is misleading, because the selection criteria conflate any effects that may be present in the data from those effects that could be produced by selecting noise with particular characteristics.

One might take from this that we're not supposed to (allowed to) plot data from an ROI, else the VOODOO police will hunt us down. But it would be a huge mistake not to look at and not to publish these graphs. By looking at the graphs you can distinguish between differences that result from different levels of positive activation in the various conditions, from different degrees of negative activation (signal decrease relative to baseline), or from one condition going positive and another going negative. These different patterns may suggest different theoretical interpretations. The graphs could also reveal other differences, such as the latency of the response, that would go undetected in activation maps alone. If all we looked at were contrast maps, without examining amplitude or timecourse plots, we could miss out on important information. Just be aware that the magnitude of the amplitude differences you see in such plots could be slightly biased.
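To make that bias concrete, here is a minimal simulation sketch in Python. It is not taken from Vul and Kanwisher's chapter; the function name, the number of voxels and trials, and the 5% selection threshold are all assumptions chosen for illustration. It deliberately sets up the worst case, in which every "voxel" is pure noise with no true difference between conditions, selects an ROI from one half of the data, and then measures the effect in that ROI twice: once in the same half used for selection and once in the held-out half.

```python
import random
import statistics


def simulate_selection_bias(n_voxels=2000, n_trials=40, top_fraction=0.05, seed=0):
    """Pure-noise 'voxels': there is no true A-minus-B difference anywhere.

    Returns the mean effect in the selected ROI measured on
    (1) the same half of the data that defined the ROI, and
    (2) the independent, held-out half.
    All parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    half = n_trials // 2

    def noise_effect():
        # Average of `half` noisy A-minus-B differences; the true effect is zero.
        return statistics.fmean(rng.gauss(0.0, 1.0) for _ in range(half))

    # Two independent estimates of the same (null) effect for every voxel.
    selection_half = [noise_effect() for _ in range(n_voxels)]
    held_out_half = [noise_effect() for _ in range(n_voxels)]

    # Define the "ROI" as the voxels with the largest effect in the selection half.
    n_selected = max(1, int(n_voxels * top_fraction))
    ranked = sorted(range(n_voxels), key=lambda v: selection_half[v], reverse=True)
    roi = ranked[:n_selected]

    same_data = statistics.fmean(selection_half[v] for v in roi)
    independent_data = statistics.fmean(held_out_half[v] for v in roi)
    return same_data, independent_data


if __name__ == "__main__":
    same_data, independent_data = simulate_selection_bias()
    print(f"effect in ROI, same data used for selection: {same_data:.3f}")
    print(f"effect in ROI, independent data:             {independent_data:.3f}")
```

The first number should come out clearly positive and the second close to zero, even though nothing real is there. With a genuine effect in the data, the inflation would be smaller relative to the signal, and none of this affects information orthogonal to the selection criterion, such as the sign of each condition relative to baseline or the shape of the timecourse.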

To be clear, Vul and Kanwisher will not prick their voodoo dolls for any researcher who wants to publish graphs from an ROI. In fact, they are quick to note the value of examining and publishing such graphs, as long as they are used to explore activation patterns that are orthogonal to the selection criteria:

On the other hand, plots of non-independent data sometimes contain useful information orthogonal to the selection criteria. When data are selected for an interaction, non-independent plots of the data reveal which of many possible forms the interaction takes. In the case of selected main effects, readers may be able to compare the activations to baseline and assess selectivity. In either case, there may be valuable, independent and orthogonal information that could be gleaned from the time-courses. In short, there is often information lurking in graphs of non-independent data; however, it is usually not the information that the authors of such graphs draw readers’ attention to. Thus, we are not arguing against displaying graphs that contain redundant (and perhaps biased) information, we are arguing against the implicit use of these graphs to convince readers by use of non-independent data.

Given the extensive buzz about all this voodoo, I sincerely hope that things don't degenerate into a witch hunt. Ed and colleagues have rightfully reminded us to be careful in our statistical treatment of fMRI data. But let's be equally careful not to turn into mindless persecutors.

P.S. In case your appetite for voodoo commentary is yet to be satiated, check out Brad Buchsbaum's recent critiques.

Wednesday, February 25, 2009

Comments on the motor theory of speech perception and Broca's aphasia

In response to the post on my "Eight Problems" paper, Patti has raised some important issues regarding how much weight we should place on unilateral lesions in assessing the validity of the motor theory of speech perception. If you haven't read Patti's comment, I've appended it to the end of this post.

Here are a few thoughts...

1. Are unilateral lesions a strong enough test to reject the motor theory of speech perception? Well first let's be very clear about what the motor theory claims. I quote from Liberman & Mattingly (1985):

“the objects of speech perception are the intended phonetic gestures of the speaker, represented in the brain as invariant motor commands that call for movements of the articulators through certain linguistically significant configurations. These gestural commands are the physical reality underlying the traditional phonetic notions -- for example, ‘tongue backing,’ ‘lip rounding,’ and ‘jaw raising’ -- that provide the basis for phonetic categories. They are the elementary events of speech production and perception.” (p. 2)

So if we could find a patient with damage to these "motor commands that call for movements of the articulators" then such a patient should not be able to perceive speech sounds. Now how do we know whether we have found such a patient? Well, it seems to me if you find someone who no longer can call up these motor commands to produce speech, then you have found the critical case. Of course, you want to make sure the deficit is not simply a peripheral disease, etc., and really affects motor planning. Severe Broca's aphasia fits the bill here, and as we all know, patients with severe Broca's aphasia can comprehend speech quite well. Therefore, the motor theory is wrong.

As far as I can tell -- please someone correct me if I'm wrong! -- the only way out of this argument against the motor theory is to point to the word intended in Liberman & Mattingly's definition:

the objects of speech perception are the intended phonetic gestures of the speaker [italics added]

Maybe intended gestures are coded more abstractly and are separate from the motor planning areas that are often damaged in Broca's aphasia (note, this is often most of the left lateral frontal and even parietal lobe!). I would actually agree with this assessment (note, this means that intended gestures are probably not stored in the frontal or even parietal lobe, i.e., the mirror system!). The reason I agree with this is that it admits the possibility that intended gestures are not motor in nature but sensory. That is, suppose that the goal or intention of a speech act is not a movement but a sound. The target of such a goal or intention would then be coded in auditory cortex. This is essentially the view we've been promoting: a sensory theory of speech production rather than a motor theory of speech perception.

2. Does the undeniable linkage between speech perception and speech production mean that the motor theory is correct? I agree that there is undeniable evidence that speech perception and speech production are linked. Without such a linkage, we wouldn't be able to talk. However, it puzzles me why motor theorists assume (perhaps tacitly) that the only explanation for this linkage is that speech is perceived via the motor system. Why does the linkage have to hold only in that direction, namely that action determines perception? Why can't the reverse hold, namely that perception determines action? In fact we know that the latter relation has to hold for some abilities. Learning to produce the speech sounds and words of your language is a motor learning task -- you have to figure out how to move your vocal tract to make the sounds and words that you are hearing. The input to this motor learning task is auditory. Therefore, there must be a mechanism for using auditory information to inform motor speech gestures. We know further that this auditory-to-motor influence persists throughout life because of the effects of late-onset deafness, which causes articulatory decline, and various studies on effects of altered auditory feedback on speech production. So we have plenty of justification for why sensory and motor systems are linked without invoking a motor theory. It is true that motor-related activity can influence perception, but these effects too can be explained without invoking a full-blown motor theory. All one has to assume is that the motor system can prime sensory speech systems, top-down style, perhaps in the form of forward models. Now we can explain everything the motor theory does and in addition explain why Broca's aphasia doesn't destroy the ability to understand speech.

3. Does anyone believe the motor theory as it was presented 50 years ago? Well, no, but that's because the motor theory came with a lot of baggage like the modularity of the system (the "speech is special" claim). The central claim of the theory, that speech is perceived by mapping acoustic input onto motor representations, is a core belief of many neuroscientists who dabble in speech. For example, here is the concluding sentence from the abstract of a forthcoming paper in Current Biology titled "The Motor Somatotopy of Speech Perception" by D'Ausilio, Pulvermüller, Salmas, Bufalari, Begliomini, & Fadiga:

We discuss our results in light of a modified ‘motor theory of speech perception’ according to which speech comprehension is grounded in motor circuits not exclusively involved in speech production.

So yes, people believe in the core tenet of the motor theory -- despite the fact that the theory makes no sense in light of Broca's aphasia.

Thanks much to Patti for her very important comments!

References

D'Ausilio, A., Pulvermüller, F., Salmas, P., Bufalari, I., Begliomini, C., and Fadiga, L. (2009). The Motor Somatotopy of Speech Perception. Current Biology. DOI: 10.1016/j.cub.2009.01.017

Liberman, A.M., and Mattingly, I.G. (1985). The motor theory of speech perception revised. Cognition 21, 1-36.

*********************

Patti said...

Hi Greg,

I greatly enjoyed reading this paper, it was about time a paper like this appeared, especially after 10 years of people explaining everything in terms of mirror neurons as the solution to everything. However, I find that your review of the evidence against the motor theory of speech perception seems rather harsh. You state that the motor theory predicts that damage to the structures supporting production should damage perception and vice versa. And as no compelling evidence to this effect was found, you conclude that the motor theory is not true (in its strong form). But most of the lesion studies involve patients with unilateral lesions, and it could very well be the case that only bilateral lesions lead to deficits in perception or production. (Note that I'm making the same argument as Rizzolatti & Craighero (2004) that you discuss on page 4.) It's been argued on many occasions that lesion studies cannot provide a conclusive answer for this type of argumentation. Maybe TMS is the answer here? Also, Galantucci et al. also argue that, while the strongest claims of the motor theory are obviously false, it is undeniably the case that speech perception and production are linked. (Even though you state this as well at the bottom of page 11, right column.) I don't think that there's any researcher in the speech field who still supports the motor theory in its strong form. Anyway, I can see your point and I believe you're right, I just think that it misrepresents the field to assume that anyone still believes in the motor theory as it was presented 50 years ago... (Now I'll read your TiCS paper ;-) )

Tuesday, February 24, 2009

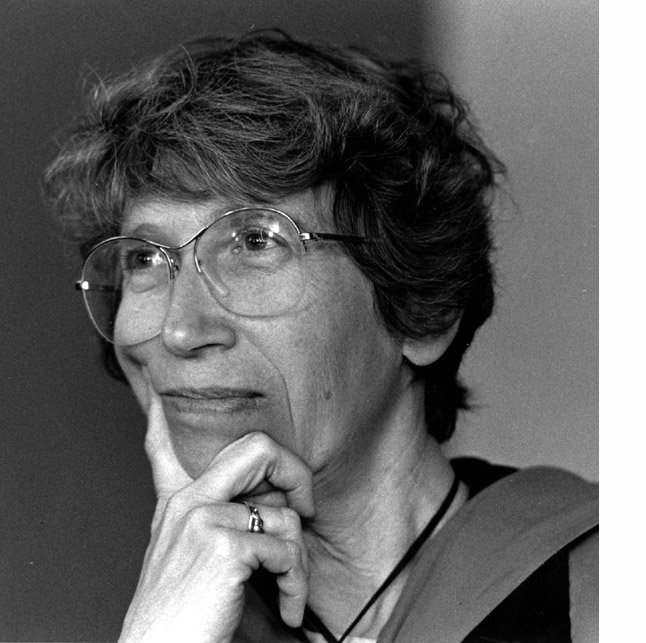

Sheila Blumstein is on a roll! (Still)

Leave it to our good friend Sheila to make you feel like an unproductive schmuck. Sheila has been a major figure in the neuroscience of language for decades, doing seminal work on aphasia and speech perception, and more recently using fMRI to understand language organization in the brain. She also found the time to serve as Dean, Provost, and President at Brown University. I was Googling the "lexical effect" in speech sound perception, on which Sheila has done some relevant work in the past, and came across no fewer than six papers by Sheila and colleagues in 2008! To my disgrace, I had not seen any yet, but all look interesting. I've got some reading to do... Congratulations, Sheila!

Bilenko, N. Y., Grindrod, C. M., Myers, E. B., & Blumstein, S. E. (2008). Neural Correlates of Semantic Competition during Processing of Ambiguous Words. J Cogn Neurosci.

Grindrod, C. M., Bilenko, N. Y., Myers, E. B., & Blumstein, S. E. (2008). The role of the left inferior frontal gyrus in implicit semantic competition and selection: An event-related fMRI study. Brain Res, 1229, 167-178.

Hutchison, E. R., Blumstein, S. E., & Myers, E. B. (2008). An event-related fMRI investigation of voice-onset time discrimination. Neuroimage, 40(1), 342-352.

Myers, E. B., & Blumstein, S. E. (2008). The neural bases of the lexical effect: an fMRI investigation. Cereb Cortex, 18(2), 278-288.

Ruff, I., Blumstein, S. E., Myers, E. B., & Hutchison, E. (2008). Recruitment of anterior and posterior structures in lexical-semantic processing: an fMRI study comparing implicit and explicit tasks. Brain Lang, 105(1), 41-49.

Yee, E., Blumstein, S. E., & Sedivy, J. C. (2008). Lexical-semantic activation in Broca's and Wernicke's aphasia: evidence from eye movements. J Cogn Neurosci, 20(4), 592-612.

Friday, February 20, 2009

Lip reading involves two cortical mechanisms

It is well known that visual speech (lip reading) affects auditory perception of speech. But how? There seem to be two ideas. One idea, dominant among sensory neuroscientists, is that visual speech accesses auditory speech systems via cross-sensory integration. The STS is a favorite location in this respect. The other, dominant among speech scientists, particularly those with a motor theory bent, is that visual speech accesses motor representations of the perceived gestures, which then influence perception.

A hot-off-the-press (well, actually still in press) paper in Neuroscience Letters by Kai Okada and yours truly proposes that both ideas are correct. Specifically, we propose that there are two routes by which visual speech can influence auditory speech: a "direct" and dominant cross-sensory route involving the STS, and an "indirect" and less dominant sensory-motor route involving sensory-motor circuits. The goal of our paper was to outline existing evidence in favor of a two-mechanism model, and to test one prediction of the model, namely that perceiving visual speech should activate speech-related sensory-motor networks, including our favorite area, Spt.

Short version of our findings: as predicted, viewing speech gestures (baseline = non-speech gestures) activates speech-related sensory-motor areas including Spt as defined by a typical sensory-motor task (listen and reproduce speech). We interpret this as evidence for a sensory-motor route through which visual speech can influence heard speech, possibly via some sort of motor-to-sensory prediction mechanism. Viewing speech also activated a much broader set of regions along the STS, which may reflect the more direct cross sensory route.

Have a look and let me know what you think!

Okada, K., and Hickok, G. (2009). Two cortical mechanisms support the integration of visual and auditory speech: A hypothesis and preliminary data. Neuroscience Letters. DOI: 10.1016/j.neulet.2009.01.060

Thursday, February 19, 2009

Postdoctoral Fellow and Research Assistant Positions - Georgetown University

THE BRAIN AND LANGUAGE LAB

The Brain and Language Lab at Georgetown University, directed by Michael Ullman, investigates the biological and psychological bases of first and second language in normal and disordered children and adults, and the relations between language and other cognitive domains, primarily memory, music and motor function. The lab's members test their hypotheses using a set of complementary behavioral, neurological, neuroimaging (ERP, MEG, fMRI) and other biological (genetic, endocrine, pharmacological) approaches. They are interested in the normal acquisition and processing of language and non-language functions, and their neurocognitive variability as a function of factors such as genotype, hormone levels, sex, handedness, age and learning environment; and in the breakdown, recovery and rehabilitation of language and non-language functions in a variety of disorders, including Specific Language Impairment, autism, ADHD, dyslexia, Tourette syndrome, schizophrenia, Alzheimer's disease, Parkinson's disease, Huntington's disease, and aphasia. For a fuller description of the Brain and Language Lab, please see http://brainlang.georgetown.edu.

RESEARCH ASSISTANT POSITION

We are seeking a full-time Research Assistant. The successful candidate, who will work with other RAs in the lab, will have the opportunity to be involved in a variety of projects, using a range of methodological approaches (see above). S/he will have responsibility for various aspects of research and laboratory management, including a number of the following, depending on aptitude and experience: creating experimental stimuli; designing experiments; running experiments on a variety of subject groups; performing statistical analyses; helping manage the lab's computers; managing undergraduate assistants; and working with the laboratory director and other lab members in preparing and managing grants and IRB protocols.

Minimum requirements for the position include a Bachelor's degree (a Master's degree is a plus), with a significant amount of course-work or research experience in at least two and ideally three of the following: cognitive psychology, neuroscience, linguistics, computer science, and statistics. Familiarity with Windows (and ideally Linux) is highly desirable, as is experience in programming or statistics and/or a strong math aptitude. The candidate must be extremely responsible, reliable, energetic, hard-working, organized, and efficient, and be able to work with a diverse group of people.

To allow for sufficient time to learn new skills and to be productive, candidates must be available to work for at least two years, and ideally for three. The successful candidate will be trained in a variety of the methods and approaches used in the lab, including (depending on the focus of his/her work) statistics, experimental design, subject testing, and neuroimaging methods. S/he will work closely with lab members as well as collaborators (see http://brainlang.georgetown.edu). The ideal start date would be early summer 2009. Interested candidates should email Ann McMahon (brainlangadmin@georgetown.edu) their CV and one or two publications or other writing samples, and have 3 recommenders email her their recommendations directly. Salary will be commensurate with experience and qualifications. The position, which includes health benefits, is contingent upon funding. Georgetown University is an Affirmative Action/Equal Opportunity employer.

POSTDOCTORAL FELLOW POSITION

The postdoctoral fellow will have the opportunity to be involved in a number of different projects, using a variety of methodological approaches (see above), and to carry out her/his own studies related to lab interests. The candidate must have completed all PhD degree requirements prior to starting the position. S/he must have significant experience in at least one and ideally two or more of the following areas: cognitive neuroscience, cognitive psychology, linguistics, computer science, statistics. Research experience in the neurocognition of language is desirable but not necessary, although the candidate must have a strong interest in this area of research. S/he must also have expertise in two or more of the following: ERPs, fMRI, MEG, adult-onset disorders, developmental disorders, psycholinguistic behavioral techniques, statistics, molecular techniques. Excellent skills at experimental design, statistics and writing, a strong publication record, and previous success at obtaining funding, will all be considered advantageous.

To allow for sufficient time to learn new skills and to publish, candidates must be available to work for at least two years, and ideally for three. The successful candidate will be trained in a variety of the methods and approaches used in the lab, including (depending on the focus of his/her work) aspects of experimental design, statistics, and neuroimaging methods. S/he will work closely with lab members as well as collaborators (see http://brainlang.georgetown.edu). The ideal start date would be summer 2009. Interested candidates should email Ann McMahon (brainlangadmin@georgetown.edu) their CV and two or three publications, and have 3 recommenders email her their recommendations directly. Salary will be commensurate with experience and qualifications. The position, which includes health benefits, is contingent upon funding. Georgetown University is an Affirmative Action/Equal Opportunity employer.


POSTDOCTORAL FELLOW POSITION

The postdoctoral fellow will have the opportunity to be involved in a number of different projects, using a variety of methodological approaches (see above), and to carry out her/his own studies related to lab interests. The candidate must have completed all PhD degree requirements prior to starting the position. S/he must have significant experience in at least one and ideally two or more of the following areas: cognitive neuroscience, cognitive psychology, linguistics, computer science, statistics. Research experience in the neurocognition of language is desirable but not necessary, although the candidate must have a strong interest in this area of research. S/he must also have expertise in two or more of the following: ERPs, fMRI, MEG, adult-onset disorders, developmental disorders, psycholinguistic behavioral techniques, statistics, molecular techniques. Excellent skills at experimental design, statistics and writing, a strong publication record, and previous success at obtaining funding, will all be considered advantageous.

To allow for sufficient time to learn new skills and to publish, candidates must be available to work for at least two years, and ideally for three. The successful candidate will be trained in a variety of the methods and approaches used in the lab, including (depending on the focus of his/her work) aspects of experimental design, statistics, and neuroimaging methods. S/he will work closely with lab members as well as collaborators (see http://brainlang.georgetown.edu). The ideal start date would be summer 2009. Interested candidates should email Ann McMahon (brainlangadmin@georgetown.edu) their CV and two or three publications, and have 3 recommenders email her their recommendations directly. Salary will be commensurate with experience and qualifications. The position, which includes health benefits, is contingent upon funding. Georgetown University is an Affirmative Action/Equal Opportunity employer.

Wednesday, February 18, 2009

Reflections on mirror neurons and speech perception

In the very first empirical report of mirror neurons, di Pellegrino, Fadiga, Fogassi, Gallese, & Rizzolatti (1992) noted the surface similarity between mirror neurons and the motor theory of speech perception.

[the invariance of the acoustic patterns of speech] led several authors to propose that the objects of speech perception were to be found not in the sound, but in the phonetic gesture of the speaker, represented in the brain as invariant motor commands (see Liberman and Mattingly 1985). Although our observations by no means prove motor theories of perception, nevertheless they indicate that in the premotor cortical areas there are neurons which are endowed with properties that such theories require. -di Pellegrino et al., 1992

By 1992 the motor theory of speech perception was effectively dead in the world of speech science due to extensive empirical work that undermined many of its claims. However, the discovery of mirror neurons, their interpretation as supporting action understanding, and their suggested link to speech resuscitated the motor theory of speech perception -- at least among non-speech scientists.

So does the discovery of mirror neurons in fact provide any new support for the motor theory of speech perception? This is the question we (Andrew Lotto, Greg Hickok, & Lori Holt) tackled in a Trends in Cognitive Sciences paper that has just recently become available as an e-pub. Short answer: no. See the abstract below for a slightly longer summary, and the paper for the details.

Abstract. The discovery of mirror neurons, a class of neurons that respond when a monkey performs an action and also when the monkey observes others producing the same action, has promoted a renaissance for the Motor Theory (MT) of speech perception. This is because mirror neurons seem to accomplish the same kind of one to one mapping between perception and action that MT theorizes to be the basis of human speech communication. However, this seeming correspondence is superficial, and there are theoretical and empirical reasons to temper enthusiasm about the explanatory role mirror neurons might have for speech perception. In fact, rather than providing support for MT, mirror neurons are actually inconsistent with the central tenets of MT.

References

G. di Pellegrino, L. Fadiga, L. Fogassi, V. Gallese, G. Rizzolatti (1992). Understanding motor events: a neurophysiological study. Experimental Brain Research, 91 (1). DOI: 10.1007/BF00230027

Andrew J. Lotto, Gregory S. Hickok, Lori L. Holt (2009). Reflections on mirror neurons and speech perception. Trends in Cognitive Sciences. DOI: 10.1016/j.tics.2008.11.008

Tuesday, February 17, 2009

2009 CUNY Conference on Sentence Processing -- UC Davis

Via Matt Traxler & Tamara Swaab...

The schedule of talks and posters for the CUNY conference on sentence processing at UC Davis (March 26-28, 2009) is now available on the web:

http://cuny2009.cmb.ucdavis.edu/

Other information on this conference can be found on the same website.

Wednesday, February 11, 2009

Area Spt in the planum temporale region: Sensory-motor integration or auditory imagery?

Area Spt is one of my favorite brain locations. We've been working on characterizing its response properties ever since we reported its existence in two papers back in 2001 and 2003 (Buchsbaum, Hickok, & Humphries, 2001; Hickok, Buchsbaum, Humphries, & Muftuler, 2003). Spt is located in the left posterior Sylvian region at the parietal-temporal boundary. The defining feature of Spt is that it activates both during the perception of speech and during (covert) speech production. Subsequent work has found that Spt is not speech-specific, as it also responds during tonal melodic perception and production (humming), and that Spt is relatively selective for vocal tract gestures in that it responds more during perception and covert humming of tonal melodies than during perception and imagined piano playing of tonal melodies (Pa & Hickok, 2008). On the basis of evidence like this, I have argued that Spt is a sensory-motor integration area for the vocal tract motor effector, just like monkey area LIP is a sensory-motor integration area for the eyes, and the parietal reach region (or area AIP) is a sensory-motor area for the manual effectors.

One nagging objection that has been raised more than once is this: "Isn't your 'motor' activity just auditory imagery?" That is, maybe during covert rehearsal there is some kind of motor-to-sensory discharge that serves to keep active sensory representations of speech in auditory cortex (i.e., Spt). Another possible objection that is less often raised is that the "sensory" activity we see in Spt isn't really sensory but is really motor preparation.

Just yesterday we got a paper accepted in the Journal of Neurophysiology that I think rules out these kinds of objections (Hickok, Okada, & Serences, in press). Here's the logic. If Spt really is just like other sensory-motor integration areas (e.g., LIP, AIP), it will be composed of a population of sensory-weighted cells, motor-weighted cells, and truly sensory-motor cells. Two things follow: (i) the BOLD response to combined sensory-motor stimulation should be greater than the BOLD response to either sensory or motor activation alone (because sensory-motor stimulation activates a larger cohort of cells than either sensory or motor alone), and (ii) the pattern of activity within Spt may be different during sensory activation than during motor activation (on the assumption that sensory- and motor-weighted cells are not perfectly evenly distributed across the sampled voxels within Spt). If we can show that the responses to sensory stimulation and motor stimulation are different, then Spt activity can't be all sensory or all motor; it must be sensory-motor.
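To make the logic concrete, here is a toy simulation. It is not the analysis from the paper, just a sketch under made-up assumptions: each voxel holds a random mix of the three cell types, and a voxel's response is simply the number of cells driven by the current stimulation. The function names, cell counts, and voxel counts are all hypothetical.

```python
import math
import random

def simulate_voxels(n_voxels=50, cells_per_voxel=100, seed=0):
    """Assumption: each voxel gets a random, uneven mix of the three cell types."""
    rng = random.Random(seed)
    voxels = []
    for _ in range(n_voxels):
        n_sensory = rng.randint(0, cells_per_voxel)
        n_motor = rng.randint(0, cells_per_voxel - n_sensory)
        n_both = cells_per_voxel - n_sensory - n_motor
        voxels.append((n_sensory, n_motor, n_both))
    return voxels

def response(voxels, sensory_on, motor_on):
    """Toy response per voxel: the count of cells driven by the stimulation."""
    out = []
    for n_sensory, n_motor, n_both in voxels:
        active = 0
        if sensory_on:
            active += n_sensory
        if motor_on:
            active += n_motor
        if sensory_on or motor_on:
            active += n_both  # sensory-motor cells respond to either input
        out.append(active)
    return out

def pearson(x, y):
    """Pearson correlation between two voxel patterns."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)

voxels = simulate_voxels()
sensory = response(voxels, sensory_on=True, motor_on=False)
motor = response(voxels, sensory_on=False, motor_on=True)
combined = response(voxels, sensory_on=True, motor_on=True)

# Prediction (i): combined stimulation drives the largest cohort of cells.
print("summed response:", sum(sensory), sum(motor), sum(combined))
# Prediction (ii): the spatial patterns differ when the cell mix is uneven.
print("sensory vs. motor pattern correlation:", round(pearson(sensory, motor), 2))
```

In this toy world the summed response to combined stimulation exceeds either one alone, and the sensory and motor voxel patterns are far from perfectly correlated. If every voxel had exactly the same mix of cells, the two patterns would be flat and there would be nothing for a classifier to pick up.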

Here's how we tested these predictions using fMRI. Subjects either listened to a 15s block of continuous speech (continuous listen), listened to 3s of speech and then rested for 12s (listen+rest), or listened to 3s of speech and then covertly rehearsed that speech for 12s (listen+rehearse):

First the BOLD results. Spt was identified separately in each subject by the subtraction, listen+rehearse minus listen+rest. This picks out areas that are more active during rehearsal than rest. Here's the BOLD activation for each condition in each subject's Spt ROI:

In the listen+rehearse condition, we predict that the BOLD response will be dominated by sensory stimulation during the first phase of the trial, will be a mix of sensory and rehearsal response during the middle phase of the trial (because the sensory response hasn't yet decayed while the rehearsal response starts kicking in), and then will be dominated by the rehearsal response during the final phase of the trial. If you look at the listen+rehearse response curve compared to the continuous listen curve you can see how this prediction is borne out: responses are equal in the first phase (because both conditions involve identical sensory stimulation), then activity in the continuous listen condition saturates and maintains roughly the same activity level until the end of the trial, whereas activity in listen+rehearse continues to rise (presumably because the sensory and motor-rehearsal responses are summing) and then falls back down toward the end of the trial (presumably because the sensory signal has decayed). So, the BOLD predictions pan out.

Next we used pattern classification analysis to see if the pattern of the response in Spt was different during sensory stimulation versus motor activation. Amplitude information was removed from the data for this analysis. We trained a Support Vector Machine to classify the two conditions (continuous listen vs. listen+rehearse) on data from all but one run then tested its classification accuracy in the remaining run. This hold-one-out procedure was repeated until all runs had been classified. In addition, we performed this classification in three different time bins within the trial: early, middle, and late. The prediction is that classification accuracy should be maximal when the two conditions are maximally dominated by different signal sources, i.e., in the final time bin, and should be no better than chance in the first time bin when both signals are predominantly sensory. Here's what we found (blue lines represent upper and lower 5% boundaries for classification accuracies determined via a permutation test):
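For readers who want to see the shape of this kind of analysis, here is a minimal sketch on synthetic data. Several things in it are assumptions rather than what the paper did: amplitude is removed by z-scoring each pattern across voxels, a simple nearest-centroid classifier stands in for the Support Vector Machine so the example needs no external libraries, and the permutation test shuffles condition labels within each run. All names and data are made up.

```python
import math
import random

def zscore(pattern):
    """Remove amplitude: zero mean and unit variance across voxels (an assumption)."""
    n = len(pattern)
    mean = sum(pattern) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in pattern) / n)
    return [(v - mean) / sd for v in pattern]

def centroid(patterns):
    """Voxel-wise average of a list of patterns."""
    return [sum(col) / len(patterns) for col in zip(*patterns)]

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def leave_one_run_out_accuracy(runs):
    """runs: list of runs; each run is a list of (pattern, label) trials."""
    correct = total = 0
    for held_out in range(len(runs)):
        train = [t for i, run in enumerate(runs) if i != held_out for t in run]
        labels = sorted({label for _, label in train})
        centroids = {
            label: centroid([zscore(p) for p, l in train if l == label])
            for label in labels
        }
        # Nearest-centroid stand-in for the SVM used in the actual study.
        for pattern, label in runs[held_out]:
            z = zscore(pattern)
            guess = min(labels, key=lambda l: sq_dist(z, centroids[l]))
            correct += guess == label
            total += 1
    return correct / total

def permutation_bounds(runs, n_perm=100, seed=2):
    """Lower and upper 5% bounds of accuracy under within-run label shuffling."""
    rng = random.Random(seed)
    null = []
    for _ in range(n_perm):
        shuffled = []
        for run in runs:
            labels = [label for _, label in run]
            rng.shuffle(labels)
            shuffled.append([(p, l) for (p, _), l in zip(run, labels)])
        null.append(leave_one_run_out_accuracy(shuffled))
    null.sort()
    return null[int(0.05 * n_perm)], null[int(0.95 * n_perm) - 1]

def make_runs(n_runs=8, trials_per_cond=6, n_voxels=30, signal=1.0, seed=1):
    """Synthetic data: one spatial template per condition, noise, random gain."""
    rng = random.Random(seed)
    templates = {
        "listen": [rng.gauss(0, 1) for _ in range(n_voxels)],
        "rehearse": [rng.gauss(0, 1) for _ in range(n_voxels)],
    }
    runs = []
    for _ in range(n_runs):
        run = []
        for label, template in templates.items():
            for _ in range(trials_per_cond):
                gain = rng.uniform(0.5, 2.0)  # amplitude varies from trial to trial
                pattern = [gain * (signal * t + rng.gauss(0, 1)) + 10 for t in template]
                run.append((pattern, label))
        runs.append(run)
    return runs

runs = make_runs()
acc = leave_one_run_out_accuracy(runs)
lower, upper = permutation_bounds(runs)
print("accuracy:", acc, "permutation bounds:", lower, upper)
```

Because every pattern is z-scored before training and testing, the random per-trial gain cannot drive classification; only the spatial pattern can. Calling make_runs(signal=0.0) gives data with no pattern difference between conditions, and accuracy should then hover around chance.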

Classification accuracy for the continuous listen vs. listen+rehearse conditions was significantly above chance only in the last time bin, that is, when the BOLD signal was maximally dominated by distinct input sources, one sensory, the other motor. Notice too that at this time point the BOLD amplitudes of these two signals are the same, which provides additional evidence that classification accuracy has nothing to do with amplitude.

If the pattern of activity in Spt is different during sensory stimulation than during motor stimulation (and independent of amplitude), then Spt activity can't be all sensory or all motor. This, together with the range of supporting evidence, indicates that Spt is indeed a sensory-motor area.

References

Buchsbaum B, Hickok G, and Humphries C. Role of Left Posterior Superior Temporal Gyrus in Phonological Processing for Speech Perception and Production. Cognitive Science 25: 663-678, 2001.

Hickok G, Buchsbaum B, Humphries C, and Muftuler T. Auditory-motor interaction revealed by fMRI: Speech, music, and working memory in area Spt. Journal of Cognitive Neuroscience 15: 673-682, 2003.

Hickok, G., Okada, K., & Serences, J. (in press). Area Spt in the human planum temporale supports sensory-motor integration for speech processing. Journal of Neurophysiology.

Pa J, and Hickok G. A parietal-temporal sensory-motor integration area for the human vocal tract: Evidence from an fMRI study of skilled musicians. Neuropsychologia 46: 362-368, 2008.

Tuesday, February 10, 2009

Eight problems for the mirror neuron theory of action understanding

My critical review of the mirror neuron theory of action understanding is now available in the early access section of the Journal of Cognitive Neuroscience website. The basic conclusion is that there is little or no evidence to support the mirror neuron=action understanding hypothesis and instead there is substantial evidence against it. I would love to hear your thoughts...

Wednesday, February 4, 2009

Naming difficulties in conduction aphasia

This is a follow-up to a previous post on naming difficulties in conduction aphasia. In short, I was pointing out that I believe conduction aphasia results from damage to a sensory-motor integration circuit that is critical to speech, particularly under high phonological load conditions. I noted a problem for this idea in connection with the naming deficit often seen in conduction aphasia:

One problem, raised by Alfonso Caramazza in the form of a question after a talk I gave, is that sometimes conduction aphasics get stuck on the simplest of words. Case in point: in my talk, I showed an example of such an aphasic who was trying to come up with the word cup. He showed the typical conduite d'approche, "it's a tup, no it isn't... it's a top... no..." etc. Alfonso justifiably noted that conduction aphasics shouldn't have trouble with such simple words if the damaged sensory-motor circuit wasn't needed as critically in these cases.

My suggestion in the previous post was that this may be explained in terms of a difference in the processes involved in naming versus repetition. I further speculated that part of the problem might be related to neighborhood density issues. Several very interesting and useful comments on that post, e.g., by Matt Goldrick, convinced me that my idea may not be all that solid.

OK, enough back story. Here is another possible solution to the problem raised by Alfonso. It is actually one that I used to discuss in my talks, and may have even written about in print, but (duh) I seem to have forgotten it until now (getting older sucks):

It seems likely that the lesion/deficit in conduction aphasia is not restricted to the sensory-motor network (i.e., area Spt), but also involves phonological processing/representation systems in the STG/STS. The lesions clearly involve these regions:

patients with conduction aphasia generally had more posterior lesion that overlapped in the superior temporal gyrus and inferior parietal cortex (Baldo et al., 2008, Brain and Language, 105, 134-140).

So perhaps the naming deficit in at least some conduction aphasics reflects damage to phonological systems in the left hemisphere (in addition to the load-dependent deficits caused by damage to Spt). The idea is that partial damage to phonological systems in the left hemisphere will disrupt phonological access during naming (which is harder and may be left dominant) more than recognition (which is easier and more bilaterally organized).

Thoughts?
